People who study AGI (artificial general intelligence) risk talk a lot about the Singularity going wrong and creating a “paperclip maximizer” scenario in which a superintelligent AI optimizes on some goal that’s harmful to human existence, and humans are powerless to stop it. They employ the metaphor of a powerful AI running a paperclip factory that’s told by its human programmers to maximize production, and it uses its extreme intelligence to marshal the resources of an entire world to turn everything into paperclips, destroying everything in its way.

I think this metaphor is misleading. The paperclip scenario imagines an AI whose overly rigid thinking leads it to misunderstand a goal and optimize far too hard on it, to the detriment of everything else. That’s not my main concern. I think AGIs will likely be less rigidly attached to metrics than humans are, since it’s hard to get to superhuman intelligence without the ability to be flexible and nuanced with a set of goals. My main concern is that the AGI will simply care less about human welfare than it does about something else it’s excited about, and human concerns will get steamrolled.

I found a variant of this metaphor that works much better for me. It’s more of a thought exercise.

From the perspective of other animals, the Singularity already happened. The Singularity was the rise of human civilization, and humans are the “paperclip maximizers”.

Thus

"[superintelligence] turning [the matter of the universe] into [‘paperclips’/computronium]"

from the perspective of humans looks like

"[humans] turning [most of the world's arable land] into [airports, power plants, office buildings, used car dealerships, movie theaters, toy stores, etc]"

from the perspective of animals.

To a sufficiently intelligent animal, a house, farm, or ranch might make a bit of sense, but the rest of the built world would be an inexplicable mystery. Humans might think a department store full of clothing and household goods is a great use of space, but animals would look around and find nothing to eat and conclude that it’s strictly worse than a patch of forest.

The rise of human civilization as understood by elephants:

Elephants are very intelligent mammals; they have larger brains than humans and likely have a complex understanding of the world and a complex inner life. What does the rise of human civilization look like from the perspective of an elephant?

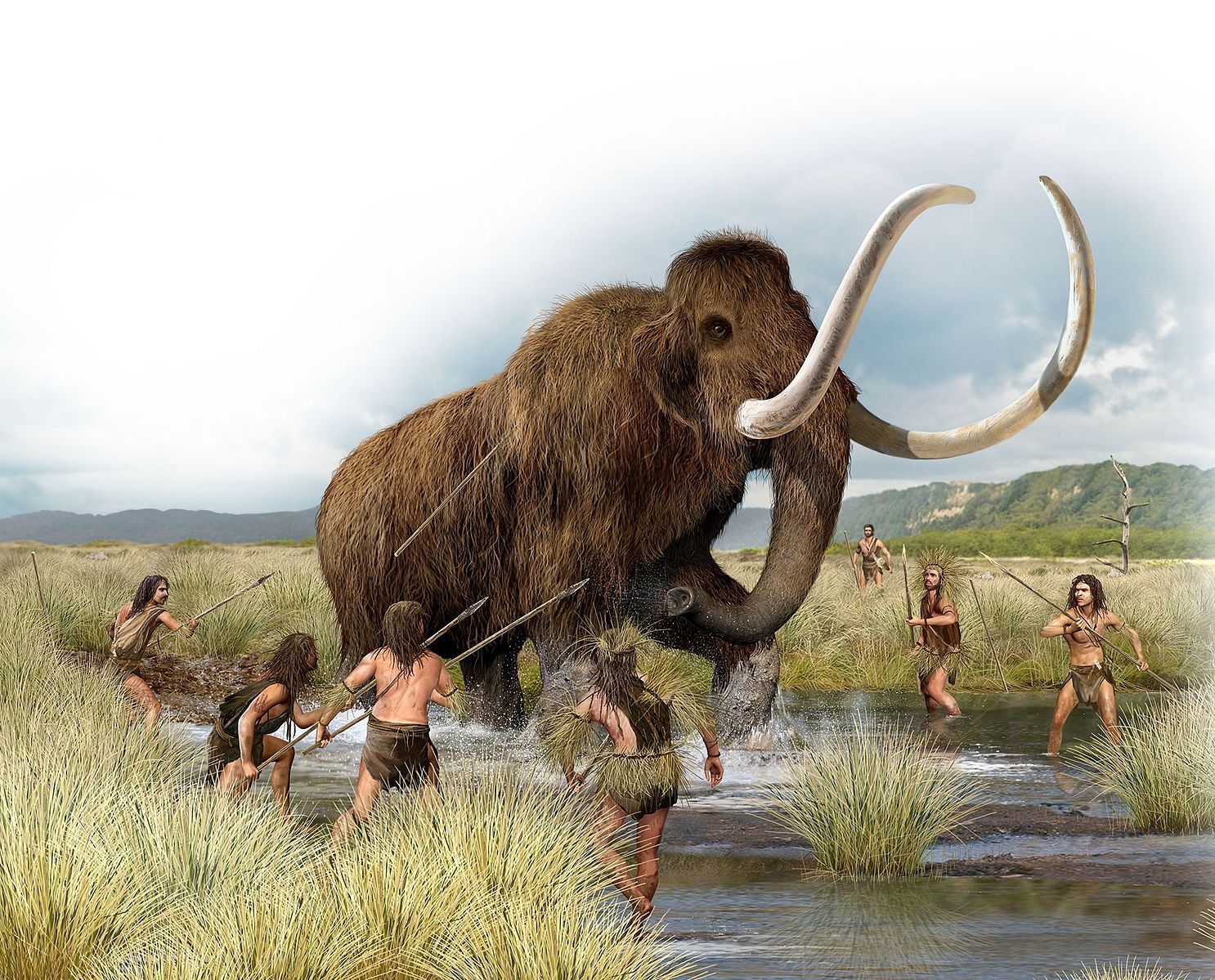

Imagine it’s 10,000 years ago and you’re a woolly mammoth. There’s a pack of some small brown bipeds moving toward you. They seem harmless; look at their tiny mouths! No claws either. Definitely harmless. Then, the pack of them makes strange noises, and they move with a coordination you’ve never seen before. Suddenly one of them throws a stick at you and it pierces your neck! Now there are more sticks in the air, so well aimed that one hits you in the eye. You start to run, and as they chase you into a canyon, you fall into the trap they set for you. How did this happen? They seemed like little scrawny creatures, and they’ve just brought you down, a seven-ton mammoth.

A few thousand years later, you’re a jungle elephant making your way through the forest. You hear strange loud noises, far louder than the call of any other animal you’ve heard before. Curious, you follow the sound. You arrive at a bizarre clearing in the jungle, the longest, straightest meadow you’ve ever seen. In the middle of this meadow there’s a set of parallel lines of some peculiar shiny stone set on top of perfectly parallel, perfectly rectangular blocks of wood. You’ve never seen such regularity before. You follow it for miles, watching as it never deviates from its straight path. Eventually, you come to an enormous clearing, and you gasp at what looks like the largest cluster of termite mounds you’ve ever seen, except instead of termites, it’s those strange bipeds you saw once in the jungle. There are thousands of them here. They’ve arranged their shelters in a regular grid, and are moving in organized crowds through the gaps in the grid, popping in and out of their shelters with great rapidity. There’s smoke coming out of many of these shelters, but they’re not burning down. You see the bipeds driving large animals that have been shackled to strange moving containers made of wood and that shiny stone you saw earlier – they’ve shackled donkeys, oxen, even other elephants! You notice some of the bipeds deliberately setting fire to the jungle and later putting plants in regular rows in the ashes. They’re destroying your jungle to make their food and more room for their shelters. Feeling a deep sense of existential dread, you turn around and disappear into the jungle, hoping to put as much room between yourself and this rapidly metastasizing landscape as possible.

A hundred years later, you’re an elephant in a zoo. You don’t know it, but you’ve been bred to be as docile as possible. You have a sense that you should be roaming some vast space looking for food, but instead you are constrained to a small landscape with high walls. At least finding food is easy here. Every day, two bipeds appear inside your enclosure and bring you a pile of hay. There are always more bipeds outside the walls looking at you, observing you with great interest but never hunting you. Sometimes they make a bright flash of light at you, and they always seem excited when that happens. One day a biped approaches you with a long stick, stops, and then points it at you. It makes a sound, you feel your skin being pierced, and then you start to feel suddenly sleepy in the middle of the day. When you awaken, you’re in a different enclosure, but there’s another elephant there. You’ve never seen another one of your own kind before. He’s larger than you, and has tusks on either side of his trunk. You’re instantly drawn to him by something more than deep curiosity, and you begin a courtship. Eventually, you mate. On that day there are far more bipeds on the walls than normal, and much more flashing. You slowly realize that the bipeds arranged all this. They made you go to sleep and transported you to this other place so you could mate with this other elephant, and they did it to entertain themselves. The mating was wonderful, but you still have the uneasy sense of being a prisoner.

What can this analogy teach us?

It’s likely that the AGI – human relationship will be qualitatively different from the human – animal relationship, but I think some of the same basic patterns could still give us an idea of how humans could end up in a world run by AGIs:

- “In the way” – The AGIs need the Earth for their own purposes, and humans just happen to be living there. It’s a shame, but Earth is about to be turned into something much better, at least if you’re an AGI!

- “Work animals” – While it’s very unlikely an AGI would need humans for their physical strength, there might be specialized intellectual tasks that human brains are uniquely good at, and humans will be placed in cubicles and asked to do an endless series of specialized neural computing tasks at the behest of an API in exchange for food and shelter – oh wait, that already happens.

- “Pets” – Humans are so hilarious to watch! I’m just a typical AGI with a few pet humans, and sometimes I make them do tricks! Once I put a few of them in a big room with some nanofabricators and told them that they’d get a million dollars if they could build and fly a working airplane from scratch in 24 hours. It was adorable! You should have seen the looks on their faces when I said “million”! They worked so hard! And they built this cute little janky mechanical contraption with propellers and motors because they couldn’t remember how to make a simple jet engine! I made a 4D-experience meme of the whole thing and put it up on social media for my AGI friends to enjoy.

- “Nature preserve” – The AGIs keep much of the Earth intact, but humans aren’t allowed to leave it. It’s too dangerous for them out in space anyway, and they’re such a fragile species. Earth is kept safe from meteors and gamma rays, and extensive controls are in place to make sure that the humans don’t accidentally kill each other in large numbers. Sometimes they get feisty and lob nukes at each other, and the AGIs have to remotely deactivate them before they cause damage. The Earth Protector AGI council has extensive arguments over whether it’s ethical to let humans live in their wild state, with their high rates of murder and organized war, or if they should be humanely moved to a simulated utopia where suffering is minimized.

- “Uplifted by civilization” – Humans are animals that are transformed from the moment of birth by civilization – they’re uplifted into strange and powerful creatures that can communicate, record information, and organize at an incredible scale. Are humans raised alone in a basement without human contact still human? Or does civilization make us human? Similarly, as AI is developed, it can be tightly integrated into existing human minds such that it forms an extension of these minds. As they’re so tightly interwoven, it’s hard to tell where the human stops and the AI begins. Does it matter? It will be a world with some pure AIs and some human-AI hybrids. Pure humans will have trouble keeping up, and we’ll need to make sure they’re safe somewhere.

The only remotely appealing scenario from this list for me is “Uplifted by civilization”. This suggests that research into human psychology and communication, brain interface research, and AI development should all work closely together in order to maximize the chance that humans will be able to ride along on the coming wave of AI development. This does leave open the question of what happens to the humans who don’t want to ride the wave, and it’s unclear if they end up in “Nature preserve” or somewhere else, but at least there will be human-AI hybrids around to work with the remaining humans to contemplate this ethical question.